A Viewpoint published in JAMA in December 2025 by Palmieri et al discusses new guidance on the safe implementation of AI in healthcare from the Joint Commission (TJC, the primary US hospital accreditor) and the Coalition for Health AI (CHAI).

Here is the guideline: https://digitalassets.jointcommission.org/api/public/content/dcfcf4f1a0cc45cdb526b3cb034c68c2

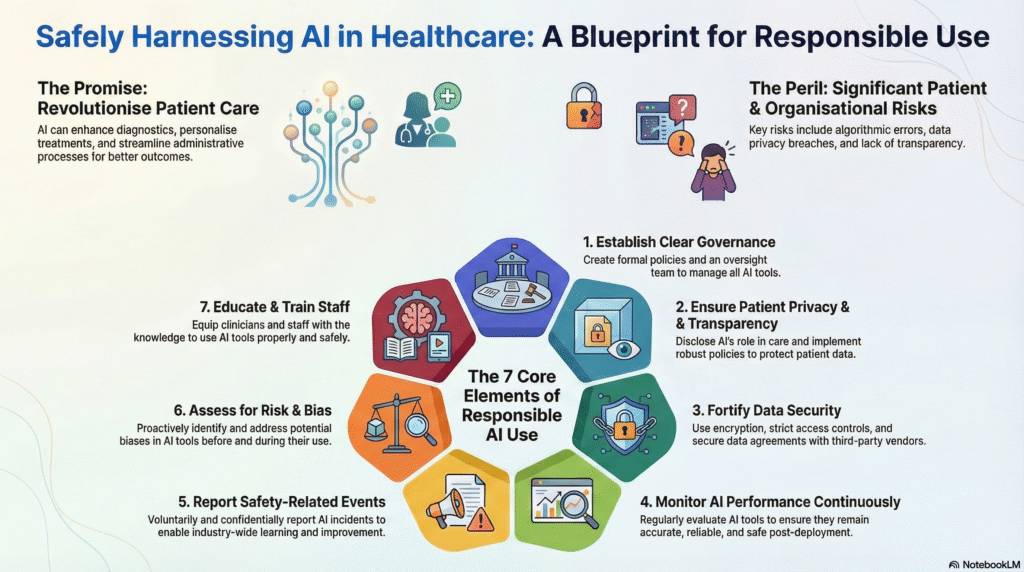

The article acknowledges that the rapid advancement of AI in healthcare (clinical applications, diagnostics, ambient scribing, decision support, etc.) offers potential gains but has also brought new risks, with little accountability.

The guideline summary:

| Element | Summary |
| --- | --- |
| AI Policies and Governance Structures | Organizations must establish a formal governance structure to oversee AI safety, risk management, and compliance. This includes designating specific individuals with technical expertise to lead AI implementation. Leaders should create policies for ethical standards, safety protocols, and regular reviews to keep up with changing regulations. |
| Patient Privacy and Transparency | Strict policies must protect patient data from unauthorized access or release. To build trust, organizations must be transparent, notifying patients when AI affects their care and explaining how their data is used. Where relevant, patient consent should be obtained. |
| Data Security and Data Use Protections | Organizations must secure data through methods like encryption (both in transit and at rest) and strict access controls. Data Use Agreements with third parties should explicitly define permitted uses, require data minimization, and prohibit re-identification of de-identified data. Compliance with HIPAA and regular security assessments are mandatory. |
| Ongoing Quality Monitoring | Teams must continuously monitor AI tools after deployment because data inputs and algorithms can change over time. This involves validating local performance, checking for bias, and ensuring the tool remains accurate for the specific clinical setting. Monitoring should be risk-based; tools driving clinical decisions require more frequent checks than administrative tools. |
| Voluntary, Blinded Reporting of AI Safety-Related Events | Organizations should treat AI errors or “near misses” as patient safety events. These events should be reported voluntarily and confidentially to independent bodies (like Patient Safety Organizations) to allow the industry to learn without fear of liability. |
| Risk and Bias Assessment | Processes must be in place to identify biases in AI tools that could threaten safety or limit access to care. Teams should verify if the AI was trained on representative data and test it against the specific populations the hospital serves. Vendors should be asked to disclose known risks and limitations, potentially using tools like an “AI Model Card”. |
| Education and Training | All relevant staff and clinicians must receive training on the proper use, benefits, and limitations of AI tools. Training should be role-specific and include information on how to access support and policy documentation. |
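
To make the Ongoing Quality Monitoring and Risk and Bias Assessment items more concrete, here is a minimal Python sketch of what a local subgroup check might look like. It is an illustration only, not something prescribed by the guidance: the function names, the 0.05 accuracy-gap threshold, the review intervals, and the toy records are all hypothetical assumptions.

```python
from collections import defaultdict

# Hypothetical review intervals (in days) per risk tier. The guidance only says
# that tools driving clinical decisions need more frequent checks than
# administrative tools; these specific numbers are made up for illustration.
REVIEW_INTERVAL_DAYS = {"clinical_decision": 30, "administrative": 180}


def subgroup_accuracy(records):
    """Return accuracy per subgroup from (subgroup, prediction, outcome) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subgroup, prediction, outcome in records:
        total[subgroup] += 1
        if prediction == outcome:
            correct[subgroup] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(accuracy_by_group, max_gap=0.05):
    """List subgroups whose accuracy trails the best subgroup by more than max_gap."""
    best = max(accuracy_by_group.values())
    return sorted(
        group for group, acc in accuracy_by_group.items() if best - acc > max_gap
    )


# Toy, made-up local validation data: (subgroup, model prediction, observed outcome).
records = [
    ("age_under_65", 1, 1), ("age_under_65", 0, 0),
    ("age_under_65", 1, 1), ("age_under_65", 0, 0),
    ("age_65_plus", 1, 0), ("age_65_plus", 0, 0),
    ("age_65_plus", 1, 1), ("age_65_plus", 0, 1),
]

accuracy = subgroup_accuracy(records)
flagged = flag_gaps(accuracy)
print("Accuracy by subgroup:", accuracy)
print("Subgroups needing review:", flagged)
print("Next review in", REVIEW_INTERVAL_DAYS["clinical_decision"], "days")
```

In practice a hospital would likely choose metrics suited to the tool (sensitivity, calibration, and so on) and set thresholds and review cadence through its own governance process; raw accuracy is used here only to keep the sketch short.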